Pipeline Templates

Pipeline Templates provide quick-start configurations for common streaming use cases. Templates include pre-configured source schemas, SQL queries, and pipeline settings that you can customize before deployment.

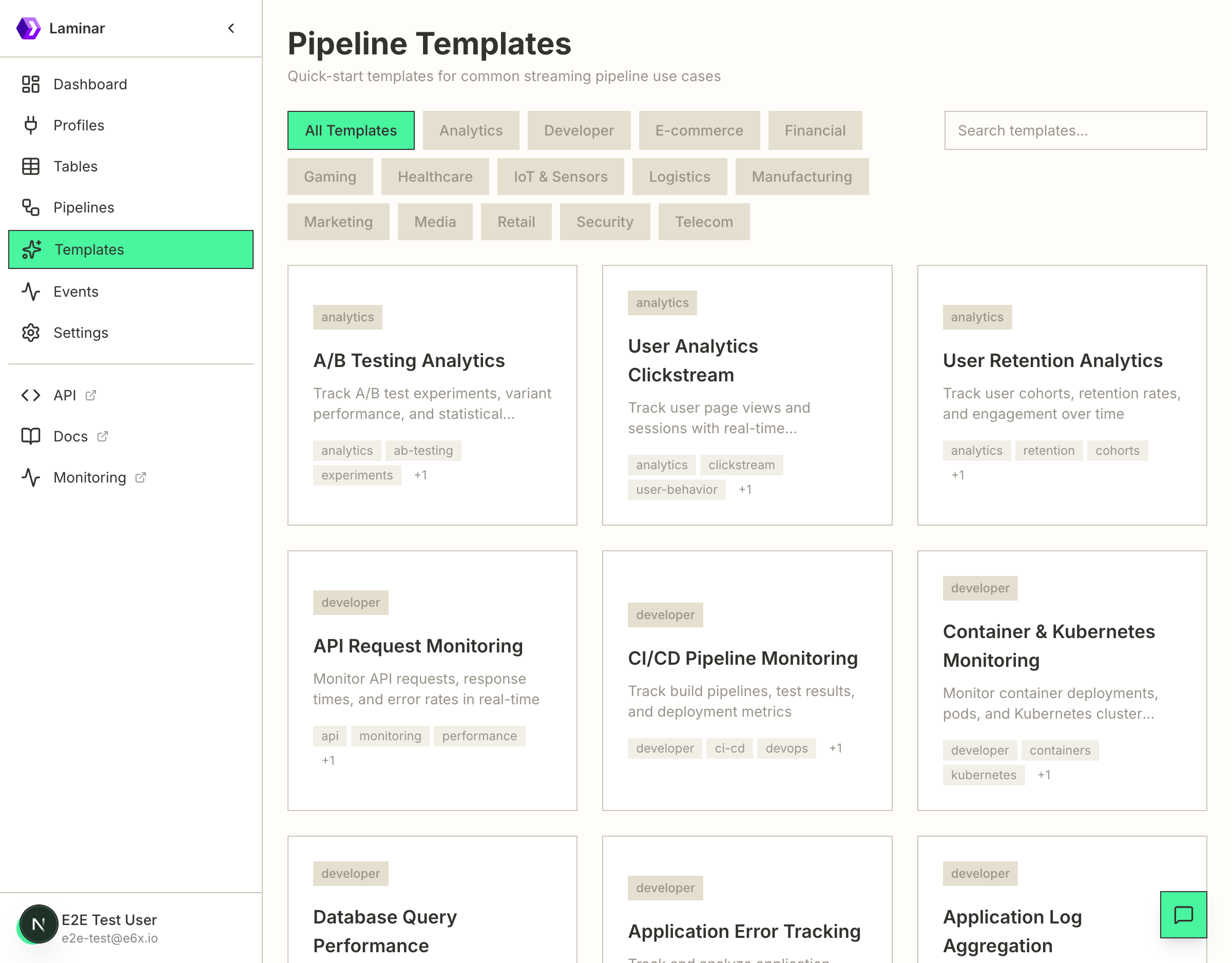

Templates List

The Templates page displays all available pipeline templates organized by category. You can filter templates by category (Analytics, Developer, E-commerce, etc.) or search for specific templates.

Template Categories

- Analytics - A/B testing, user analytics, retention tracking

- Developer - API monitoring, CI/CD pipelines, error tracking, log aggregation

- E-commerce - Cart abandonment, order processing, recommendations

- Financial - Fraud detection, transaction monitoring

- Gaming - Player tracking, leaderboards, matchmaking

- Healthcare - Patient monitoring, appointments, medication tracking

- IoT & Sensors - Device monitoring, sensor data processing

- Logistics - Fleet management, shipment tracking, warehouse operations

- Manufacturing - Production monitoring, equipment maintenance

- Marketing - Campaign analytics, customer segmentation

- Media - Content streaming, ad impressions, social engagement

- Retail - POS transactions, inventory management, loyalty programs

- Security - Access control, intrusion detection, vulnerability scanning

- Telecom - Call detail record (CDR) processing, network monitoring

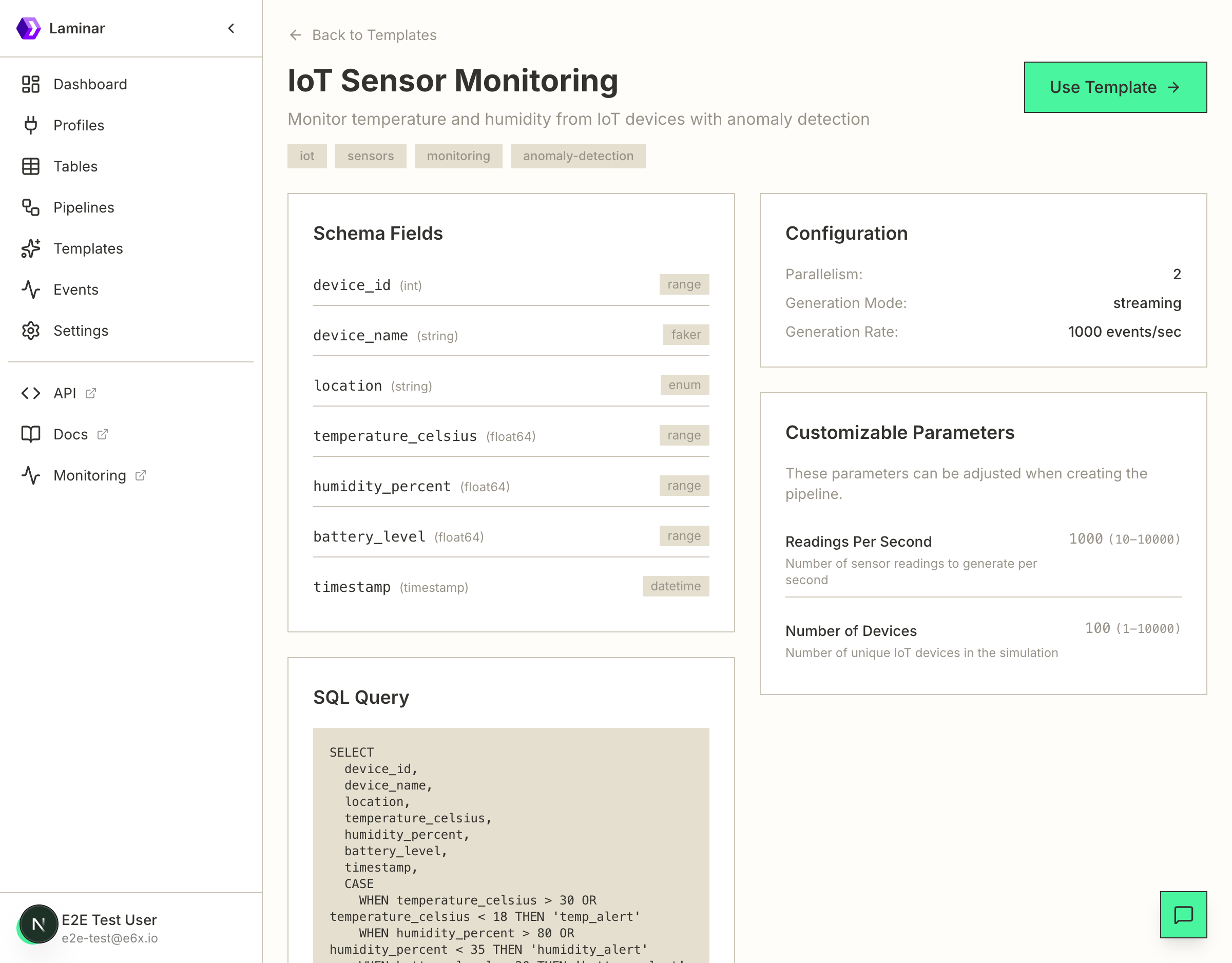
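The category filter and the search box can be thought of as two conditions applied together. The sketch below is a minimal illustration, assuming a hypothetical in-memory catalog. `TemplateSummary`, `filter_templates`, and the sample entries are made-up names, not the product's API, and the real search may match different fields than name and description.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class TemplateSummary:
    # Hypothetical catalog entry; field names are illustrative only.
    name: str
    category: str
    description: str


def filter_templates(
    templates: List[TemplateSummary],
    category: Optional[str] = None,
    query: str = "",
) -> List[TemplateSummary]:
    """Keep templates in `category` (if given) whose name or description
    contains `query`, compared case-insensitively."""
    needle = query.strip().lower()
    matches = []
    for t in templates:
        if category is not None and t.category != category:
            continue
        if needle and needle not in t.name.lower() and needle not in t.description.lower():
            continue
        matches.append(t)
    return matches


# Hypothetical sample catalog drawn from the categories listed above.
CATALOG = [
    TemplateSummary("A/B Testing", "Analytics", "Compare metrics across experiment variants"),
    TemplateSummary("Fraud Detection", "Financial", "Flag suspicious transactions"),
    TemplateSummary("Log Aggregation", "Developer", "Collect and count log events"),
]

if __name__ == "__main__":
    print([t.name for t in filter_templates(CATALOG, category="Developer")])  # ['Log Aggregation']
    print([t.name for t in filter_templates(CATALOG, query="FRAUD")])         # ['Fraud Detection']
```

Applying both conditions together scopes a search to the selected category, which is one plausible reading of how the page combines them.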

Template Details

Click any template to view its details, including the schema fields, SQL query, and customizable parameters.

Template Information

Each template includes:

- Schema Fields - The data fields that will be generated or expected, with their types and generators

- SQL Query - The transformation query that processes the streaming data

- Configuration - Default settings like parallelism and generation rate

- Customizable Parameters - Values you can adjust when creating the pipeline

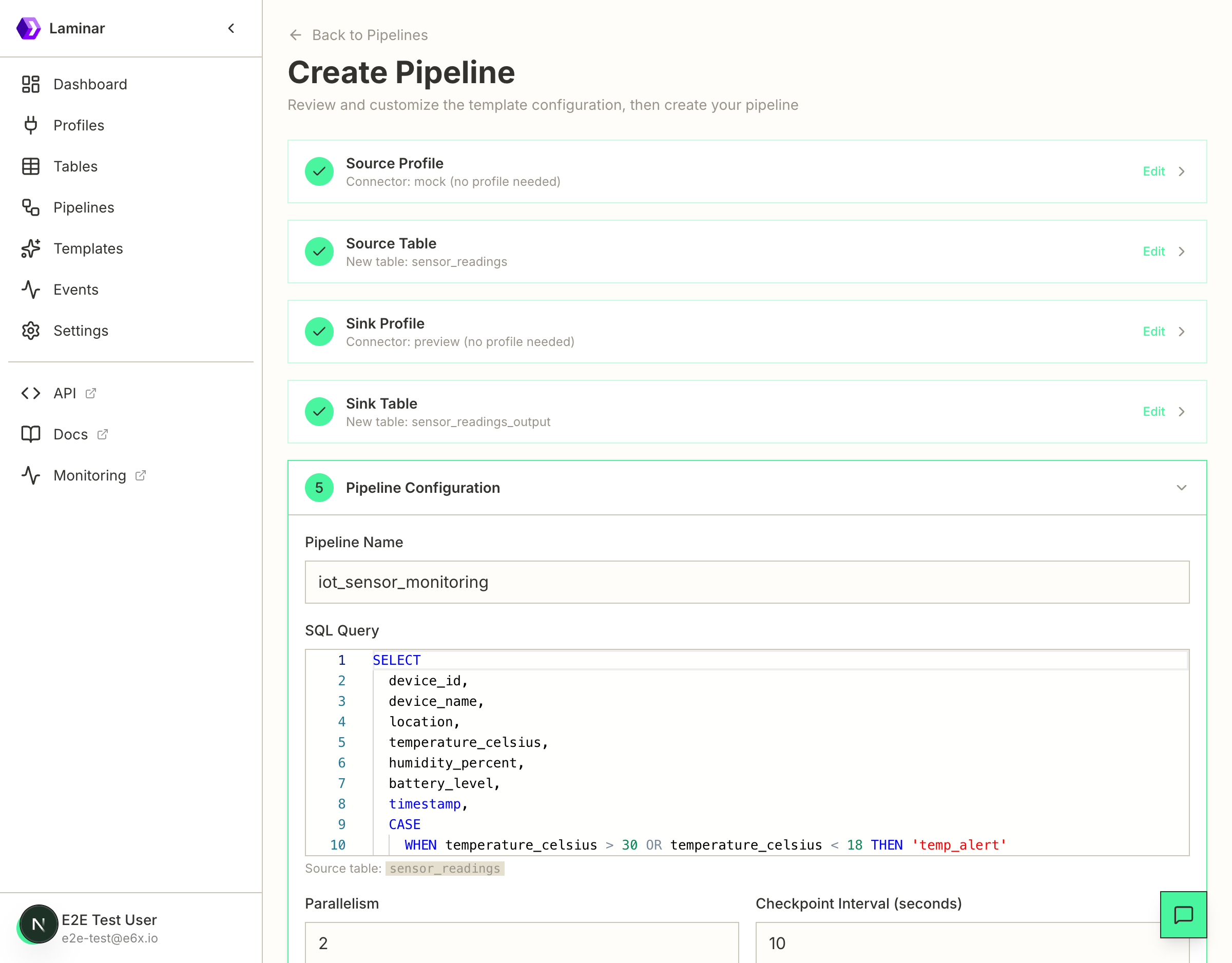
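To make the four parts of a template concrete, here is a minimal sketch of how they might fit together, assuming that customizable parameters are substituted into the SQL text and that each schema field carries a generator for mock data. `PipelineTemplate`, `SchemaField`, the `$name` placeholder syntax, and the example template are all hypothetical; the product's actual template format is not described on this page.

```python
import random
from dataclasses import dataclass, field
from string import Template
from typing import Any, Callable, Dict, List


@dataclass
class SchemaField:
    name: str
    type: str
    # Hypothetical generator: returns one mock value using the given RNG.
    generator: Callable[[random.Random], Any]


@dataclass
class PipelineTemplate:
    name: str
    fields: List[SchemaField]
    sql: str  # customizable parameters appear as $placeholders (an assumption)
    parallelism: int = 1
    events_per_second: int = 10
    parameters: Dict[str, Any] = field(default_factory=dict)

    def render_sql(self, **overrides: Any) -> str:
        """Fill placeholders with defaults, letting the user's overrides win."""
        values = {**self.parameters, **overrides}
        return Template(self.sql).substitute(values)

    def sample_rows(self, n: int, seed: int = 0) -> List[Dict[str, Any]]:
        """Generate n mock rows; a fixed seed makes the output repeatable."""
        rng = random.Random(seed)
        return [{f.name: f.generator(rng) for f in self.fields} for _ in range(n)]


# Hypothetical example in the spirit of the Developer category's API monitoring.
slow_requests = PipelineTemplate(
    name="Slow API Requests",
    fields=[
        SchemaField("service", "TEXT", lambda rng: rng.choice(["api", "web", "worker"])),
        SchemaField("status_code", "INT", lambda rng: rng.choice([200, 404, 500])),
        SchemaField("latency_ms", "INT", lambda rng: rng.randint(1, 500)),
    ],
    sql="SELECT service, status_code, latency_ms FROM $source_table "
        "WHERE latency_ms > $latency_threshold_ms",
    parameters={"source_table": "events", "latency_threshold_ms": 250},
)

if __name__ == "__main__":
    print(slow_requests.render_sql(latency_threshold_ms=100))
    for row in slow_requests.sample_rows(3):
        print(row)
```

Using `Template.substitute` rather than `safe_substitute` makes a missing parameter fail loudly with a `KeyError` instead of leaving a stray placeholder in the SQL.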

Creating a Pipeline from a Template

Click Use Template to start the pipeline creation wizard with the template's configuration pre-filled.

Configuration Steps

The wizard guides you through:

- Source Profile - The connector type for input data (e.g., mock for testing)

- Source Table - The table that provides input data

- Sink Profile - The connector type for output data (e.g., preview for testing)

- Sink Table - The table that receives processed data

- Pipeline Configuration - Name, SQL query, parallelism, and checkpoint interval

You can edit any of these settings before creating the pipeline.

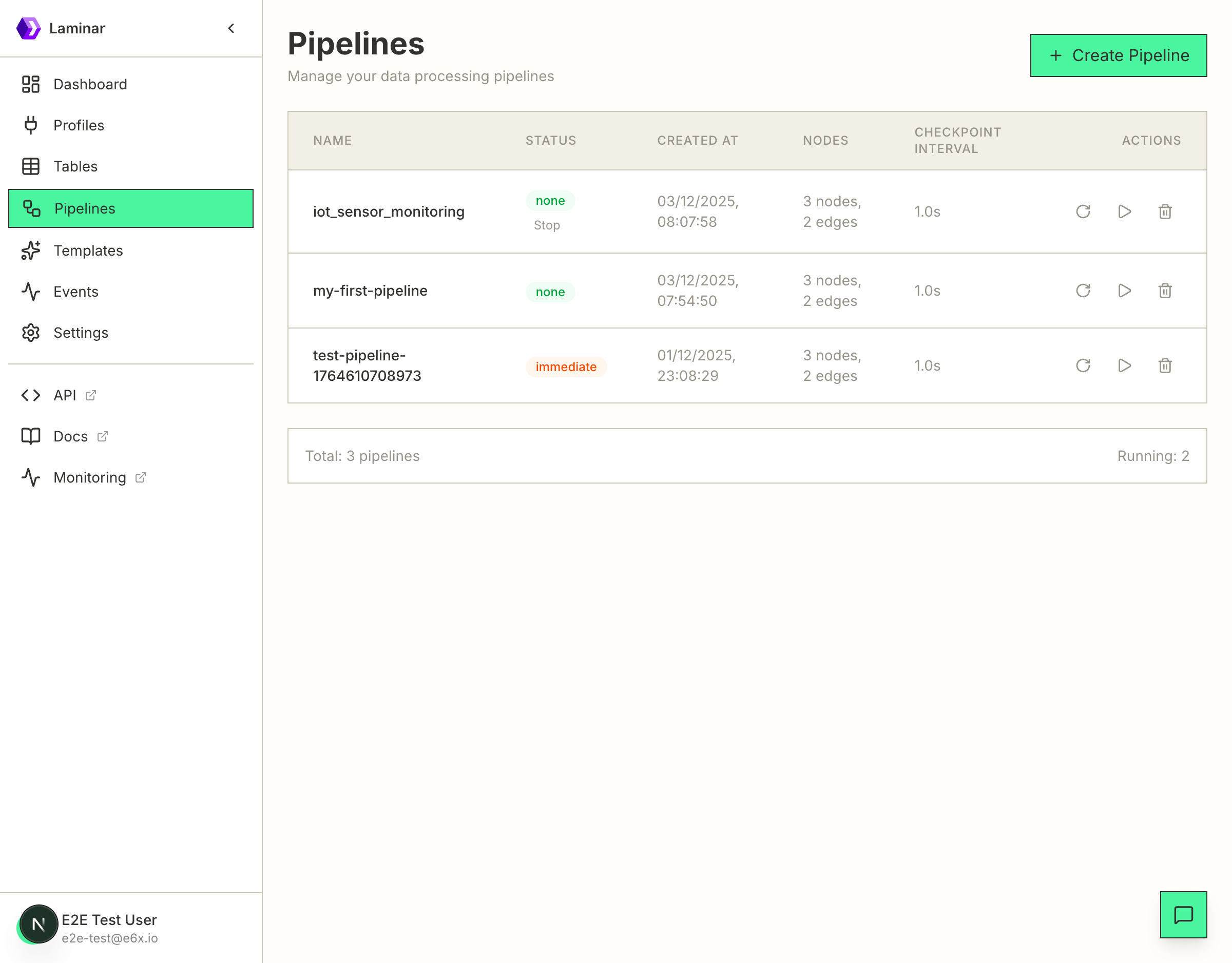
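The values the wizard collects can be modeled as one record that is checked before the pipeline is created. This is a hypothetical sketch: `PipelineSpec`, its field names, and the validation rules (non-empty names and query, parallelism of at least 1, a positive checkpoint interval in seconds) are assumptions chosen for illustration, not the product's actual rules or defaults.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class PipelineSpec:
    # Hypothetical record of the values gathered by the wizard's steps.
    name: str
    source_profile: str  # connector type, e.g. "mock"
    source_table: str
    sink_profile: str    # connector type, e.g. "preview"
    sink_table: str
    query: str
    parallelism: int
    checkpoint_interval_s: int

    def validate(self) -> List[str]:
        """Return human-readable problems; an empty list means the spec looks usable."""
        errors = []
        required = {
            "name": self.name,
            "source_table": self.source_table,
            "sink_table": self.sink_table,
            "query": self.query,
        }
        for label, value in required.items():
            if not value.strip():
                errors.append(f"{label} must not be empty")
        if self.parallelism < 1:
            errors.append("parallelism must be at least 1")
        if self.checkpoint_interval_s <= 0:
            errors.append("checkpoint interval must be positive")
        return errors


# Pre-filled values as a template might supply them (all hypothetical).
prefilled = PipelineSpec(
    name="slow-api-requests",
    source_profile="mock",
    source_table="events",
    sink_profile="preview",
    sink_table="slow_requests",
    query="SELECT service, latency_ms FROM events WHERE latency_ms > 250",
    parallelism=1,
    checkpoint_interval_s=10,
)

# Editing a setting before creation yields a new spec; the pre-filled one is unchanged.
edited = replace(prefilled, parallelism=4)

if __name__ == "__main__":
    print(edited.validate())                             # []
    print(replace(prefilled, parallelism=0).validate())  # ['parallelism must be at least 1']
```

Making the record immutable and editing through `dataclasses.replace` keeps the template's pre-filled values intact, so the user's changes can always be compared against or reset to the originals.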

Pipeline Created

After you click Create Pipeline, you're redirected to the Pipelines list, which shows your newly created pipeline.

The pipeline starts automatically, and its status appears in the list.

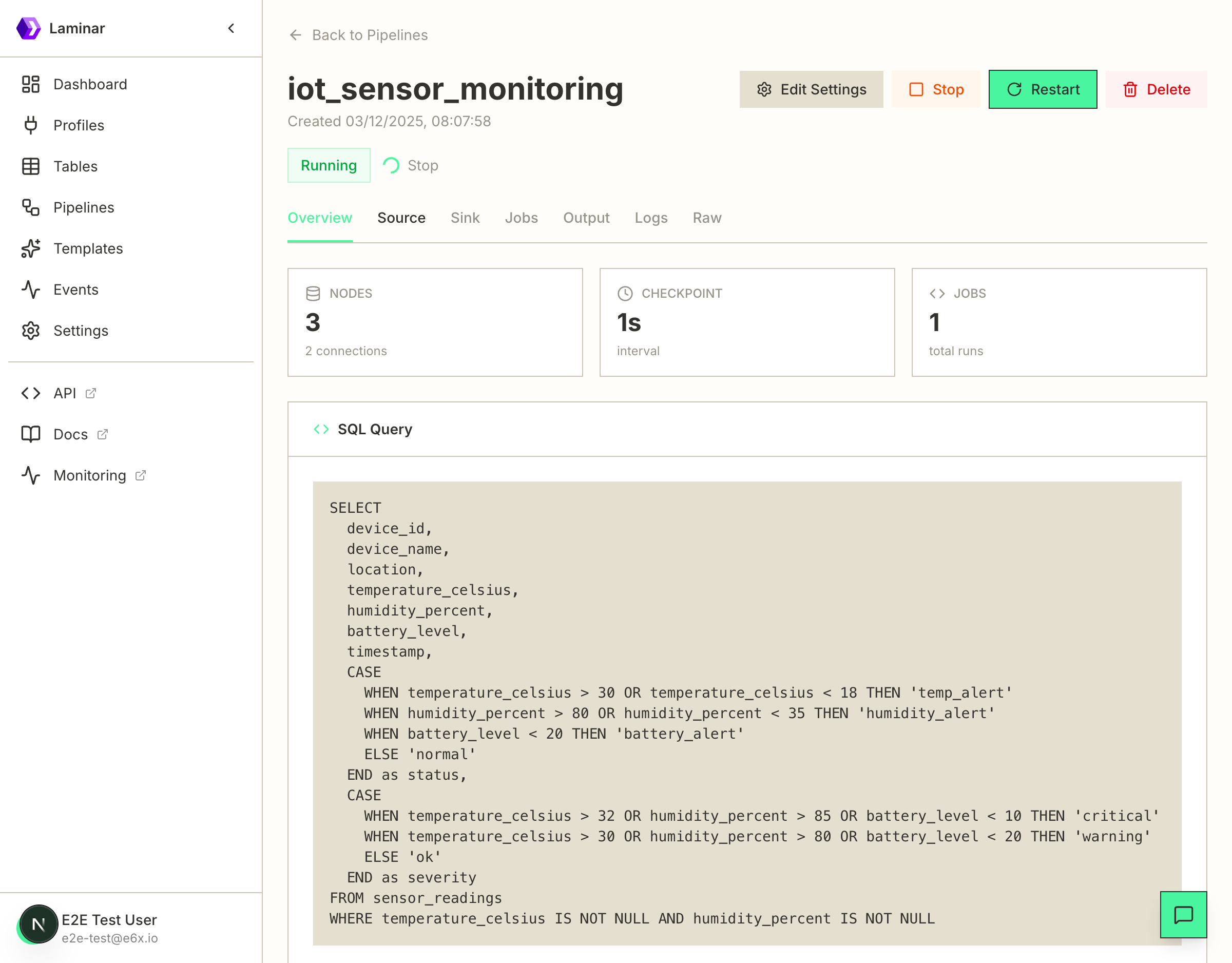

Pipeline Details

Click on a pipeline name to view its details, including the SQL query, configuration, and real-time metrics.

Pipeline Tabs

- Overview - Summary of nodes, checkpoint interval, and SQL query

- Source - Source table configuration and schema

- Sink - Sink table configuration and schema

- Jobs - History of pipeline runs

- Output - Real-time stream output preview

- Logs - Pipeline execution logs

- Raw - Raw pipeline configuration JSON

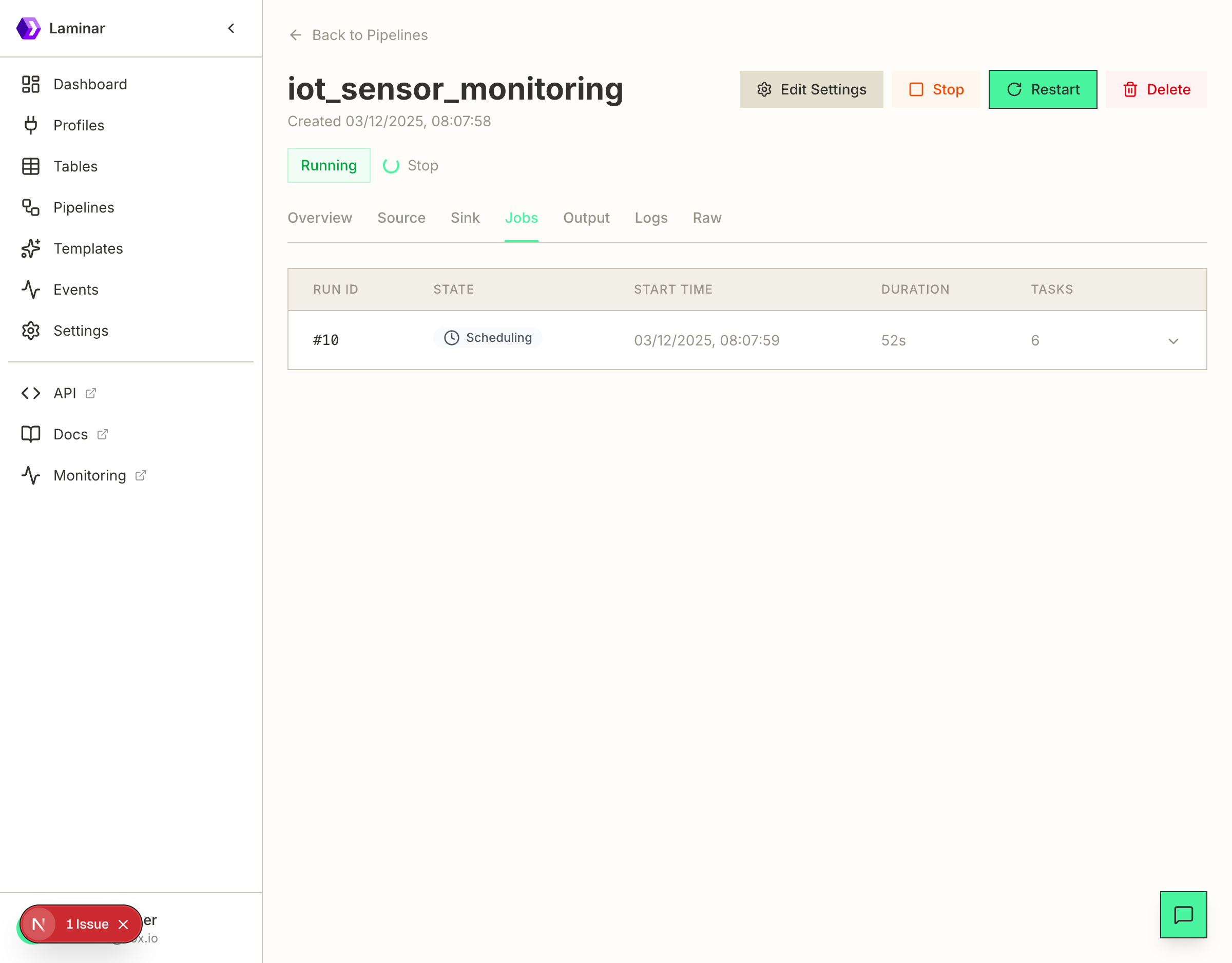

Monitoring Jobs

The Jobs tab shows all pipeline runs with their status, start time, duration, and task count.
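The duration column is simple for finished runs; for a run that is still active, a natural reading is the time elapsed so far. The sketch below assumes that behavior. `JobRun`, its fields, and the `format_duration` display format are hypothetical and only illustrate the columns named above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class JobRun:
    # Hypothetical row in the Jobs tab.
    job_id: str
    status: str
    started_at: datetime
    finished_at: Optional[datetime]  # None while the job is still active
    tasks: int

    def duration(self, now: datetime) -> timedelta:
        """Elapsed time to the finish, or to `now` if the job has not finished."""
        end = self.finished_at if self.finished_at is not None else now
        return end - self.started_at


def format_duration(d: timedelta) -> str:
    """Render a duration as '1h 2m 7s', omitting hours when zero."""
    total = int(d.total_seconds())
    hours, rem = divmod(total, 3600)
    minutes, seconds = divmod(rem, 60)
    return f"{hours}h {minutes}m {seconds}s" if hours else f"{minutes}m {seconds}s"


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    running = JobRun("job-2", "Running", start, None, tasks=4)
    print(format_duration(running.duration(now=start + timedelta(minutes=5, seconds=3))))  # 5m 3s
```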

Job States

- Scheduling - Job is being scheduled on workers

- Running - Job is actively processing data

- Completed - Job finished successfully

- Failed - Job encountered an error
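The four states can be read as a small state machine. The transitions below are an assumption inferred from the state descriptions: a job is scheduled, runs, and ends as Completed or Failed, and is also assumed to be able to fail while still scheduling. The page does not list the allowed transitions, and `JobState`, `ALLOWED`, and `advance` are hypothetical names.

```python
from enum import Enum
from typing import Dict, Set


class JobState(Enum):
    SCHEDULING = "Scheduling"
    RUNNING = "Running"
    COMPLETED = "Completed"
    FAILED = "Failed"


# Assumed transitions; Completed and Failed are terminal.
ALLOWED: Dict[JobState, Set[JobState]] = {
    JobState.SCHEDULING: {JobState.RUNNING, JobState.FAILED},
    JobState.RUNNING: {JobState.COMPLETED, JobState.FAILED},
    JobState.COMPLETED: set(),
    JobState.FAILED: set(),
}


def is_terminal(state: JobState) -> bool:
    """A state with no outgoing transitions is final."""
    return not ALLOWED[state]


def advance(current: JobState, target: JobState) -> JobState:
    """Move to `target`, rejecting transitions this model does not allow."""
    if target not in ALLOWED[current]:
        raise ValueError(f"invalid transition: {current.value} -> {target.value}")
    return target


if __name__ == "__main__":
    state = JobState.SCHEDULING
    for nxt in (JobState.RUNNING, JobState.COMPLETED):
        state = advance(state, nxt)
    print(state.value, is_terminal(state))  # Completed True
```

Keeping terminal states free of outgoing transitions means a rerun is modeled as a new job record, which fits the Jobs tab listing each run separately.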

Next Steps

- Learn about creating custom pipelines

- Explore SQL functions for data transformation

- Set up production connectors for real data sources